Neural Internet

I've been reading some papers on the Neural Internet - a new technological advancement in brain-computer interface (BCI) research that enables locked-in patients to operate a Web browser directly with their brain potentials.

Descartes - the man behind 'Cogito, ergo sum' - is also the name of a system that uses neuronal signals from the brain and transforms them into binary or multidimensional computer commands, enabling the patient to surf the Internet and read and send e-mails.

In Descartes (the system), the commands are arranged in a dichotomous decision tree based on a modified Huffman’s algorithm.
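To make the idea concrete, here is a minimal sketch of how a Huffman-style binary decision tree could be built over browser commands, so that the most frequently used commands need the fewest yes/no brain signals. It uses the plain textbook Huffman construction, not the modified variant the papers describe, and the command names and usage weights are made up purely for illustration.

```python
import heapq
from itertools import count

# Hypothetical usage weights (illustrative only, not from the papers).
COMMAND_WEIGHTS = {
    "follow link": 40,
    "scroll": 30,
    "back": 15,
    "enter URL": 10,
    "write e-mail": 5,
}

def build_decision_tree(weights):
    """Build a binary tree with standard Huffman merging.

    Leaves are command names; inner nodes are (left, right) tuples.
    Expects at least two commands.
    """
    tiebreak = count()  # unique counter so the heap never compares nodes
    heap = [(w, next(tiebreak), cmd) for cmd, w in weights.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next(tiebreak), (left, right)))
    return heap[0][2]

def decision_paths(tree, prefix=""):
    """Map each command to its string of binary decisions ('0' or '1')."""
    if isinstance(tree, str):
        return {tree: prefix}
    left, right = tree
    paths = decision_paths(left, prefix + "0")
    paths.update(decision_paths(right, prefix + "1"))
    return paths

def select(tree, decisions):
    """Walk the tree with a sequence of binary signals until a command is hit."""
    node = tree
    for bit in decisions:
        node = node[int(bit)]
        if isinstance(node, str):
            return node
    raise ValueError("decision sequence ended before reaching a command")

tree = build_decision_tree(COMMAND_WEIGHTS)
paths = decision_paths(tree)

if __name__ == "__main__":
    for command, path in sorted(paths.items(), key=lambda item: len(item[1])):
        print(f"{path:>5}  {command}")
```

With these made-up weights, the most common command sits one decision from the root, while rare ones like writing an e-mail take four. That is the appeal of the approach for a user who can only produce one reliable binary signal at a time.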

For those who really want to know how this is done, google it, or here's a short list of the approaches:

• Electroencephalographically (EEG)-controlled Web browser using SCPs, SMR/beta EEG rhythms, or P300 evoked potentials

• Invasive methods using electrocorticographic activity (ECoG), local field potentials or neuronal action potentials

• Metabolic brain activity measured by hemodynamic methods such as near-infrared spectroscopy

But where is this going?

Although the papers highlight the functioning of the neural Internet and its clinical implications for motor-impaired patients, I expect these techniques to bubble up to the common user within the next 15 years. That's right, young moms and pops - your children (and maybe even some of you readers) will be able to access and maneuver within the Internet with thoughts alone.

The ultimate next-gen Second Life app.

Future studies will have to investigate under which social conditions the neural Internet can be offered to a wide range of users who do not suffer from any significant communication impairment. In general, it can be assumed that if a patient can achieve reliable control of any brain signal that can serve as a binary or even a multidimensional input to a BCI system, any standard Internet functionality can be implemented based on this signal.

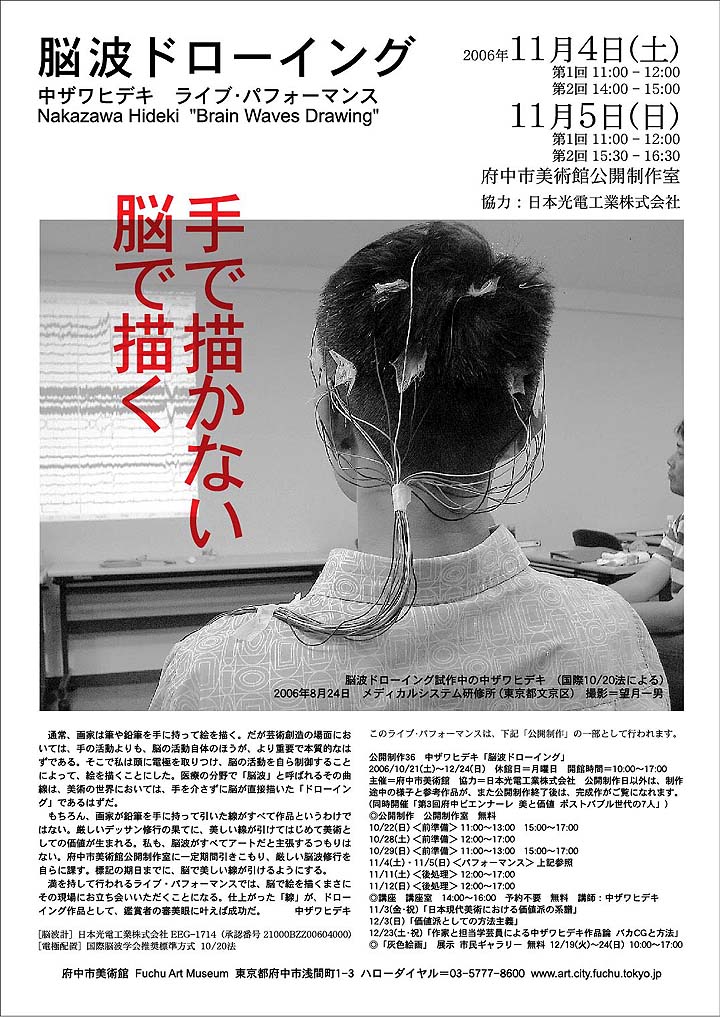

As seen above and on Nakazawa Hideki's homepage, these applications are already being used in artistic and cultural contexts.

Neat, but full-fledged use on the Internet is still in the long-term planning.

In the mid-term, the plan is that we will be using a voice/neural mashup to navigate virtual space. Our minds are naturally able to handle simple and routine 'transactions' or 'events', while voice recognition apps manage the complicated commands.

Here's a scenario:

[voice] 'browser, url wired magazine'

[mind] (following retina reader) scroll down left hand navigation

[mind] (neuronic activity map) click on 'News'

[voice] 'search negroponte'

[mind] (retina reader) click 3rd search result

[mind] (retina reader) click on '$100 laptop'

[voice] 'bookmark, print and send url to JohnnyG'

[mind] (retina reader) find 'Send'

[mind] (neuronic activity map) click 'Send'

[voice] 'back'

This complete set of instructions, with practice, would be done in under a minute - in a 'look ma, no hands' fashion.

We should be planning for this. Forget Web 2.0 - this is the new Internet - this is Web N+1.

Tags: neural internet, voice recognition, social media, web n+1, descartes

Labels: descartes, Hideki, neural internet, voice recognition, Web N+1


1 Comment:

what a great tag: web n+1, for all the things to come, it does make a lot of sense! :)
